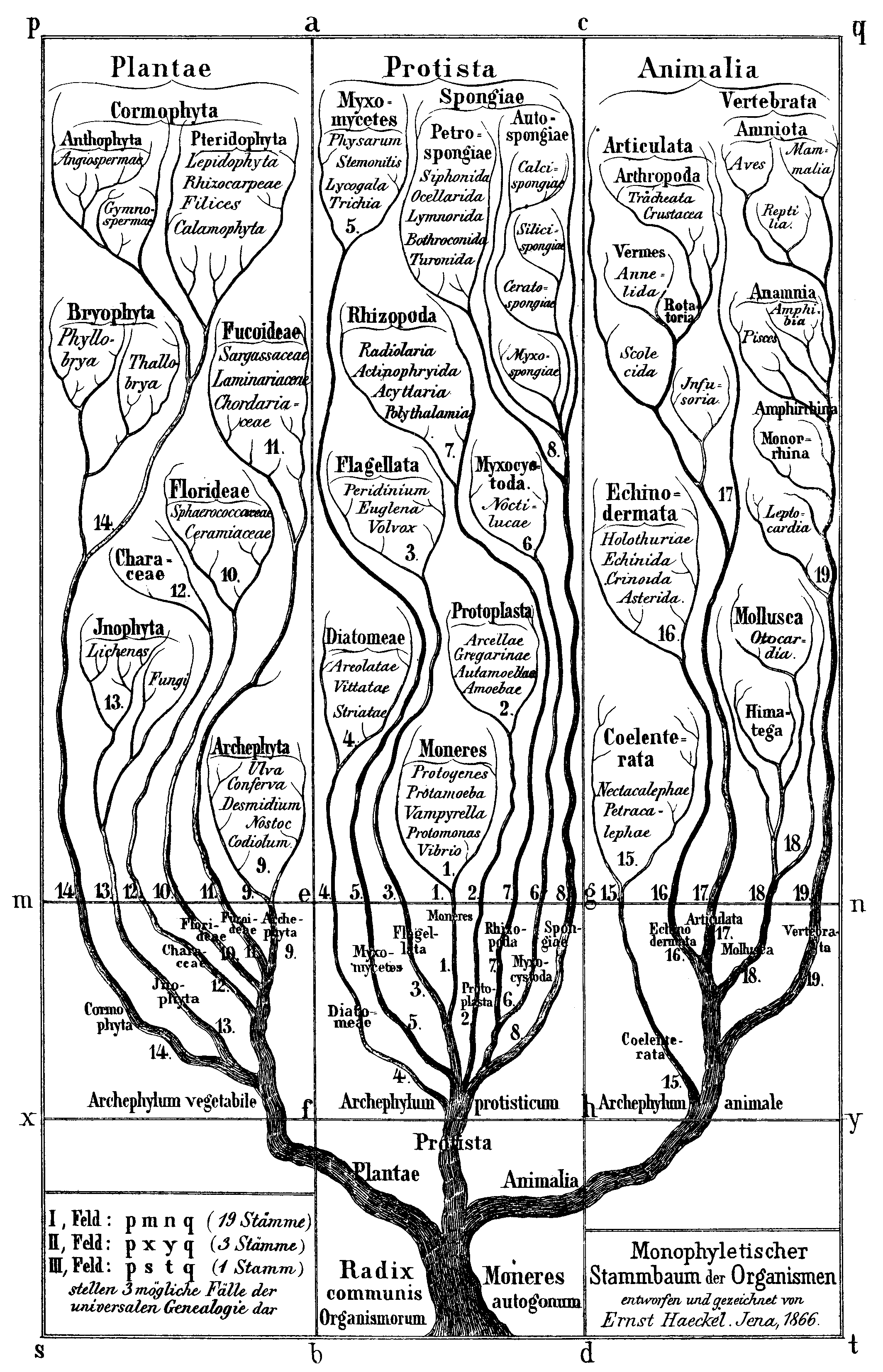

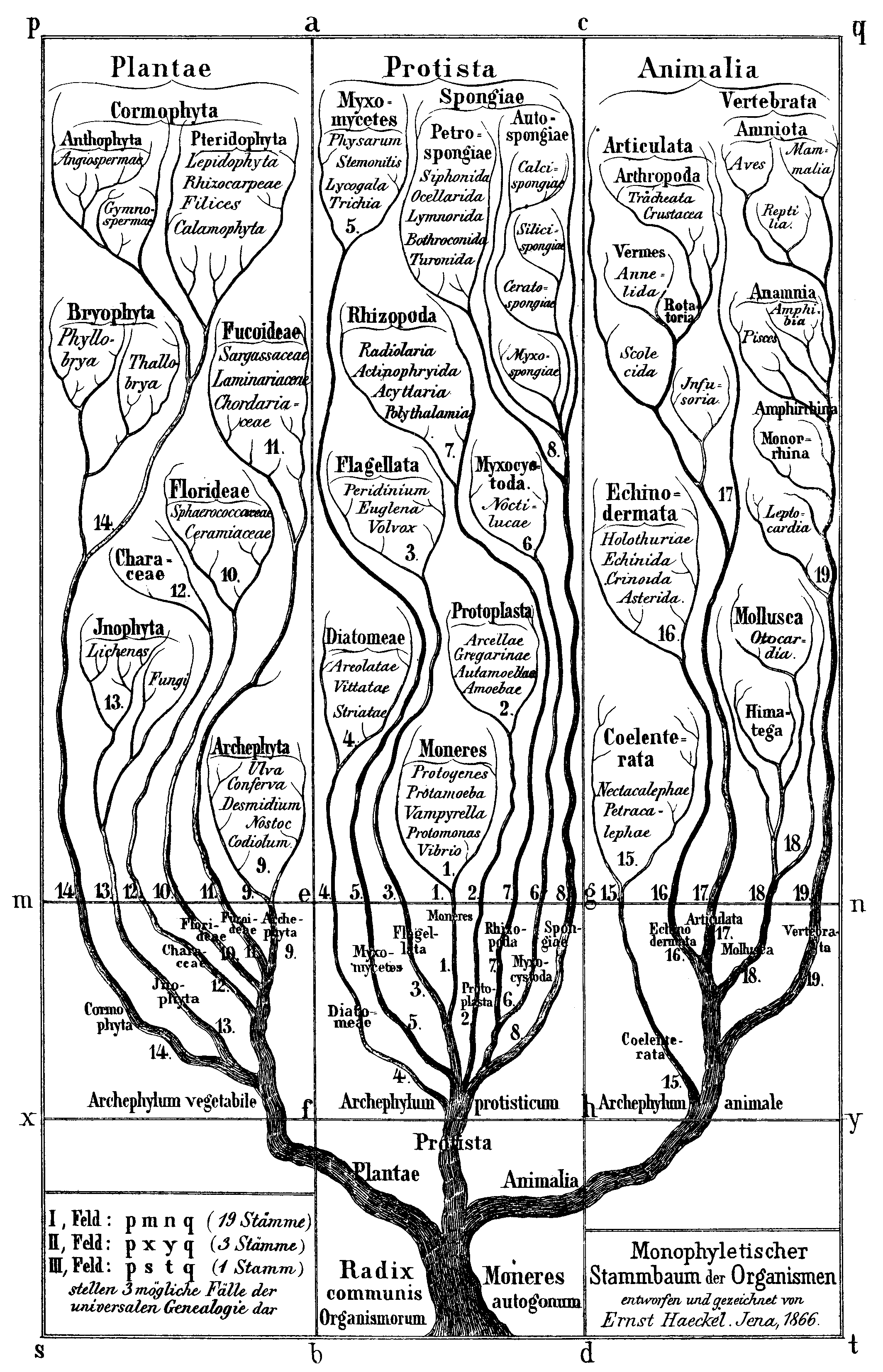

Help us build a 'Tree of Life' for Weird Machines!

What if we could look at classes of exploitable vulnerabilities as sharing a common origin, the way biological species do, with their clades, phyla, classes, and so on? Understanding common origins is key in the natural sciences, so why not in hacking?

We know many different species of exploitable vulnerabilities in just about anything that has some chips in it, and the ways to exploit them look similar: for example, a bug in a digital radio's PHY layer can be exploited exactly like SQL injection (SQLi). Why do we find the same weird machines in system layers that are so different?

To understand the origins of exploitable vulnerability species, we need a cladistic/genetic classification of weird machines. We need a Voyage of the Beagle to collect enough specimens of weird machines to read the story of the origin of these species.

Bring us your favorite specimen of a weird machine and help us build the Beagle!

Background: Weird Machines

Weird machines are Turing's revenge on programmers who put their faith in abstractions and refused to learn how their machines actually worked. It took decades after von Neumann to drive home the idea that actual computers can be reliably programmed with crafted input data to perform much more powerful computations than they were programmed for.

Finding and describing classes of weird machines is the hacker community's great contribution to the sum of human understanding of what computing is about. But what makes a piece of code turn into a cog of a weird machine?

Weird machines are born of ad-hoc input parsers (including those in network protocol stacks, which are not treated as parsers but should be) and of overly complex and ambiguous input languages. The disparity between the input recognizer/validator and the actual input language creates the explosion of "borrowable" states and state transitions that makes up the weird machine, which is then instantiated and programmed by the crafted input that serves as the exploit program.
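To make the validator/input-language disparity concrete, here is a minimal sketch in Python. The toy command language, the functions `adhoc_validate`, `consume`, and `recognize`, and the crafted input are all hypothetical illustrations, not taken from any real system. The ad-hoc validator approximates "exactly one command" as "contains no `\n`", but the consumer splits its input with Python's `str.splitlines()`, which also treats `\r`, `\x0b`, `\x0c`, `\x85`, `\u2028` and several other characters as line boundaries. The language the consumer actually accepts is therefore larger than the one the validator reasons about, and a crafted input can drive the consumer into a state the validator never considered.

```python
import re

# Hypothetical toy input language (an illustration, not a real protocol):
# a message is exactly ONE command of the form "set <key>=<value>",
# where <key> and <value> are lowercase ASCII letters.


def adhoc_validate(msg: str) -> bool:
    # Ad-hoc validator: approximates "one command" as
    # "no newline character" plus a prefix check.
    return "\n" not in msg and msg.startswith("set ")


def consume(msg: str) -> dict:
    # The consumer's *actual* input language: str.splitlines() breaks
    # lines on \r, \x0b, \x0c, \x85, \u2028 and more, not only on \n,
    # so one "validated" message may carry several commands.
    state = {}
    for line in msg.splitlines():
        key, _, value = line[len("set "):].partition("=")
        state[key] = value
    return state


# Full recognizer: the whole message must match the grammar exactly
# before anything acts on it.
COMMAND = re.compile(r"set [a-z]+=[a-z]+")


def recognize(msg: str) -> bool:
    return COMMAND.fullmatch(msg) is not None


crafted = "set name=alice\rset role=admin"
print(adhoc_validate(crafted))  # True: the validator sees one command
print(consume(crafted))         # {'name': 'alice', 'role': 'admin'}
print(recognize(crafted))       # False: rejected before processing
```

The `recognize` function illustrates the opposite design choice: it checks the entire message against the grammar before processing, so the checking code and the consuming code cannot disagree about what a message means. Whether full recognition is practical for a given real protocol is a separate question this toy does not settle.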

How can we spot and classify this "borrowable" weird computing power? How much paranoia in handling input-affected bytes is enough to prevent programming by borrowing weird states? We need to understand all the ways such power arises and trace them back to their common origins. Then the cladistics of weird machines will come to light and help us cure the pain of C/C++ and other kinds of cog-ridden programming.